11 nov. 2024

How GIGAPOD Provides a One-Stop Service, Accelerating a Comprehensive AI Revolution

GIGABYTE

Stand: B55

)

GIGABYTE introduces GIGAPOD, an advanced scaling AI supercomputing infrastructure solution designed to enhance modern AI applications, such as large language model (LLM) training and real-time inference. Built around powerful GPU servers, it incorporates accelerators: NVIDIA HGX™ H100 & H200, AMD Instinct™ MI325X & MI300X, or Intel® Gaudi® 3 AI accelerators. Utilizing GPU interconnects through NVIDIA® NVLink®, AMD Infinity Fabric™ Link, or RoCEv2, it combines the nodes in a cluster into a single computing unit via high-speed networking, significantly boosting the crucial high-speed parallel computing demand in AI applications.

From design, production, to deployment, GIGABYTE can manage it all with GIGAPOD because of its flexible and scalable architecture. It is designed to accommodate the explosive growth in AI training models, providing a one-stop solution for transforming traditional data centers into large-scale AI cloud service providers. Leveraging GIGABYTE's expertise in hardware and strong partnerships with leading upstream GPU manufacturers not only ensures a smooth AI supercomputer deployment, but also provides users with reliable AI productivity.

From design, production, to deployment, GIGABYTE can manage it all with GIGAPOD because of its flexible and scalable architecture. It is designed to accommodate the explosive growth in AI training models, providing a one-stop solution for transforming traditional data centers into large-scale AI cloud service providers. Leveraging GIGABYTE's expertise in hardware and strong partnerships with leading upstream GPU manufacturers not only ensures a smooth AI supercomputer deployment, but also provides users with reliable AI productivity.

Challenges in Modern Computing Architectures

In the early days of GPU applications and AI development, when the computing requirements were relatively low and the interconnect technology was not yet mature, GPU computing primarily ran on a simple single-server architecture. However, as the scale of training models increased, the importance of multi-GPU and multi-node architectures became more apparent, especially for training LLMs with hundreds of billions of parameters. The GPU is important, but the cluster computing interconnect cannot be overlooked as it can significantly reduce AI training times and has become a vital component for large-scale computing centers.

When advanced enterprises build ideal AI application solutions, they typically face three primary requirements during the initial hardware deployment:

While discussions about data center construction often focus on the number of GPUs and computing power, without a well-established power supply and cooling system, the GPUs in the server room cannot realize their potential. Additionally, a high-speed networking architecture is a must as it plays a crucial role in ensuring that each computing node can communicate in real time, enabling fast GPU-to-GPU communication to handle the exponential growth in data.

To overcome the challenges faced by modern data centers, the following sections will detail why GIGAPOD is the best solution for building AI data centers today.

When advanced enterprises build ideal AI application solutions, they typically face three primary requirements during the initial hardware deployment:

- Powerful Computing: GPU nodes can compute in tandem, enabling them to efficiently perform parallel processing tasks such as matrix operations during AI training and simulations.

- Systematic Hardware Deployment: Data center deployment requires meticulous planning for key aspects such as data center power, floor layout, rack configuration, and thermal management, ensuring complete system hardware integration.

- Uninterrupted High-Speed Network Architecture: A high-speed network topology provides high bandwidth, low-latency network interconnections to speed up data transfer and enhance system performance.

While discussions about data center construction often focus on the number of GPUs and computing power, without a well-established power supply and cooling system, the GPUs in the server room cannot realize their potential. Additionally, a high-speed networking architecture is a must as it plays a crucial role in ensuring that each computing node can communicate in real time, enabling fast GPU-to-GPU communication to handle the exponential growth in data.

To overcome the challenges faced by modern data centers, the following sections will detail why GIGAPOD is the best solution for building AI data centers today.

Optimized Hardware Configuration

A basic GIGAPOD configuration consists of 32x GPU servers, each equipped with 8x GPUs, providing a total of 256x interconnected GPUs. Additionally, a dedicated rack is required to house network switches and storage servers.

Figure 1: GIGABYTE G593 Series Server

Below is the configuration/specification of the GIGABYTE G593 series server:

All server models in the G593 series support 8-GPU baseboards and dual CPUs. In parallel computing workloads, the server primarily relies on the GPU, while complex linear processing tasks are handled by the CPU. This workload distribution is ideal for AI training applications, and users can choose their preferred CPU platform from either AMD or Intel.

- CPU: Dual 4th/5th Generation Intel® Xeon® Scalable Processors or

AMD EPYC™ 9005/9004 Series Processors - GPU: NVIDIA HGX™ H100/H200 GPU or

OAM-compliant accelerators: AMD Instinct™ MI300 Series and Intel® Gaudi® 3 AI - Memory: 24x DIMMs (AMD EPYC) or 32x DIMMs (Intel Xeon)

- Storage: 8x 2.5” Gen5 NVMe/SATA/SAS-4 hot-swap drives

- PCIe slots: 4x FHHL and 8x low-profile PCIe Gen5 x16 slots

- Power: 4+2 3000W 80 PLUS Titanium redundant power supplies

All server models in the G593 series support 8-GPU baseboards and dual CPUs. In parallel computing workloads, the server primarily relies on the GPU, while complex linear processing tasks are handled by the CPU. This workload distribution is ideal for AI training applications, and users can choose their preferred CPU platform from either AMD or Intel.

Unique Advantages of the GIGABYTE G593 Series:

- Industry-leading high-density design: The G593 series offers the highest density 8-GPU air-cooled server on the market. Compared to the larger, industry-standard 7U/8U designs, GIGABYTE achieves the same compute performance in a more compact 5U chassis.

- Front-mounted GPU tray: The removable front GPU tray allows for easier maintenance and access of the GPU modules.

- Advanced cooling technology: Supports Direct Liquid Cooling (DLC) for CPU, GPU, and NVSwitch to reduce energy consumption and achieve a lower PUE (Power Usage Effectiveness).

- 1-to-1 balanced design: Each PCIe switch connects to the same number of GPUs, storage devices, and PCIe slots, making it ideal for GPU RDMA and direct data access from NVMe drives.

- Six CRPS redundant power supplies: Features a redundant power design, with a 3600W PSU option to achieve N+N redundancy.

When building a performance-optimized AI computing solution, avoiding bandwidth bottlenecks is crucial. In high-performance AI systems or clusters, the ideal scenario is for all data transfer to use the GPU's high-bandwidth memory, avoiding data transfers through the processor's PCIe lanes. To solve the bandwidth performance bottleneck, GIGABYTE integrates four Broadcom PCIe switches on the system board to allow GPUs to access data through Remote Direct Memory Access (RDMA) without routing through the CPU. For accelerated networking, each GPU connects to NVIDIA® ConnectX®-7, which uses InfiniBand or Ethernet networking at up to 400Gb/s.

Additionally, PCIe switches help with signal expansion, allowing for greater I/O connectivity by efficiently sharing PCIe lanes beyond those devoted to the GPU modules. GIGABYTE’s design includes four additional PCIe x16 slots, often used with NVIDIA BlueField®-3 DPUs for networking, security, and data processing in high-performance clusters.

Scalable Network Architecture

AI computing often involves processing large datasets across multiple distributed nodes. In order to realize the true potential of a cluster, the network plays a key role in enabling high data transfer speeds across nodes, ensuring synchronization, and maintaining data consistency across the entire system.

During large language model training, the data-intensive workload is handled by the eight GPUs within each server. These GPUs can exchange data at speeds of up to 900GB/s using high-speed interconnect technology, maximizing computational efficiency. Data exchange with other GPU nodes in the cluster is handled through a network architecture of multiple switches, commonly using NVIDIA Quantum-2 QM9700 switches with 400Gb/s NDR InfiniBand.

During large language model training, the data-intensive workload is handled by the eight GPUs within each server. These GPUs can exchange data at speeds of up to 900GB/s using high-speed interconnect technology, maximizing computational efficiency. Data exchange with other GPU nodes in the cluster is handled through a network architecture of multiple switches, commonly using NVIDIA Quantum-2 QM9700 switches with 400Gb/s NDR InfiniBand.

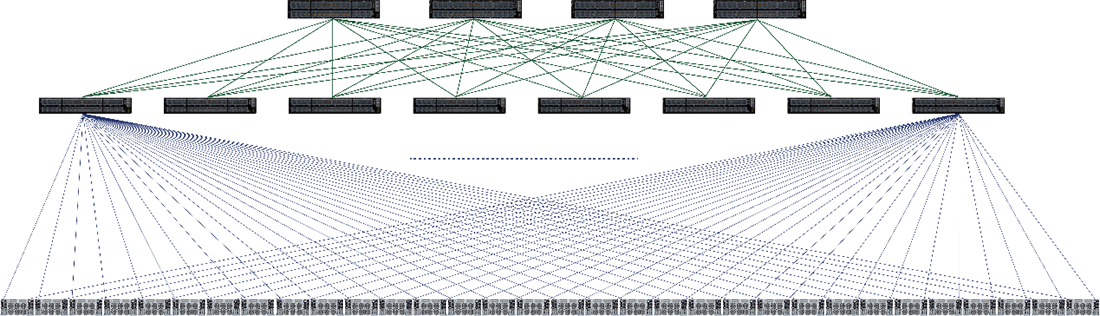

GIGAPOD's Network Topology: Non-Blocking Fat-Tree Topology

Non-Blocking: Any two points can communicate without interference or congestion from other traffic. In a non-blocking network, sufficient bandwidth is always available, ensuring that all data can be transmitted simultaneously without introducing delay or bottlenecks.

Fat-Tree: This topology leverages a leaf-spine network concept. Leaf switches connect servers, while spine switches form the network's core. Each leaf switch connects to each spine switch in the network, providing multiple paths to prevent congestion and ensure high performance and minimal latency. The ‘fatter” higher levels towards at the top of the tree have a higher bandwidth, avoiding performance bottlenecks, making GIGAPOD ideal for scalable, high-traffic environments.

With these two concepts in mind, we can dive into the GIGAPOD network architecture. In GIGAPOD, each GPU in a server is paired with an NIC card, creating 8 GPU-NIC pairs per server. Each GPU-NIC pair in a server is connected to a different leaf switch in the middle layer. For example, GPU-NIC pair #1 from GPU server #1 connects to Leaf Switch #1, and GPU-NIC pair #1 from GPU server #2 connects to the same Leaf Switch #1.

Next, the leaf and spine switches are connected to form a fat tree. This expansion to the top layer follows a similar concept to connecting servers to leaf switches. Ports from each leaf switch are evenly distributed among spine switches, forming a top layer network.

Fat-Tree: This topology leverages a leaf-spine network concept. Leaf switches connect servers, while spine switches form the network's core. Each leaf switch connects to each spine switch in the network, providing multiple paths to prevent congestion and ensure high performance and minimal latency. The ‘fatter” higher levels towards at the top of the tree have a higher bandwidth, avoiding performance bottlenecks, making GIGAPOD ideal for scalable, high-traffic environments.

With these two concepts in mind, we can dive into the GIGAPOD network architecture. In GIGAPOD, each GPU in a server is paired with an NIC card, creating 8 GPU-NIC pairs per server. Each GPU-NIC pair in a server is connected to a different leaf switch in the middle layer. For example, GPU-NIC pair #1 from GPU server #1 connects to Leaf Switch #1, and GPU-NIC pair #1 from GPU server #2 connects to the same Leaf Switch #1.

Next, the leaf and spine switches are connected to form a fat tree. This expansion to the top layer follows a similar concept to connecting servers to leaf switches. Ports from each leaf switch are evenly distributed among spine switches, forming a top layer network.

Figure 2: GIGAPOD’s Cluster Using Fat Tree Topology

In summary, a GIGAPOD scalable unit consists of 32x GPU servers and twelve switches, with four serving as spine switches and eight as leaf switches, all connected and managed through 256x NIC cards to orchestrate each GPU. Below is an example of the specifications:

- 4x NVIDIA Quantum-2 QM9700 Spine Switches (Top Layer) with NVIDIA MMA4Z00-NS 2x400Gb/s Twin-port OSFP Transceivers

- 8x NVIDIA Quantum-2 QM9700 Leaf Switches (Middle Layer) with NVIDIA MMA4Z00-NS 2x400Gb/s Twin-port OSFP Transceivers

- Each server has 8 NVIDIA ConnectX®-7 NICs (Bottom Layer) with NVIDIA MMA4Z00-NS400 400Gb/s Single-port OSFP Transceivers

- NVIDIA MPO-12/APC Passive Fiber Cables

Complete Rack-Level AI Solution

After introducing the system configuration and network topology, rack integration is the final step in deploying GIGAPOD. Along with the number of racks and node configurations, it's essential to consider an optimized cabling design to maximize its cost-effectiveness. Key factors to consider for rack integration include:

This comprehensive approach ensures that GIGAPOD delivers powerful and scalable AI solutions with efficient deployment and management.

- Optimizing cable length to prevent tangling and reduce excess length and unnecessary costs

- Maximizing usage of space to increase equipment density

- Improving cooling to enhance performance and ensure effective heat dissipation

- Streamlining installation and setup for simplified, efficient deployment

- Providing aftermarket services for ease of maintenance and scalability

This comprehensive approach ensures that GIGAPOD delivers powerful and scalable AI solutions with efficient deployment and management.

Figure 3: GIGAPOD with Liquid Cooling: 4 GPU Compute Racks

Figure 4: GIGAPOD with Air Cooling: 8 GPU Compute Racks

Efficient space utilization has always been a top priority in data center planning.With the continuous advancements in CPU and GPU technology, along with the global emphasis on green computing, thermal management has become a key factor in the design and infrastructure of data centers. For customers looking to maximize computing power within their existing data center space, GIGAPOD has a direct liquid cooling design that is the perfect solution. GIGABYTE uses an 8-GPU platform in a 4U chassis, where both CPUs and GPUs are equipped with DLC cold plates. Heat is effectively dissipated from the chips via passive liquid cooling, ensuring peak performance and energy efficiency. Additionally, by removing heat sinks, some fans, and implementing an optimized thermal design, more of the space is freed up. This allows GIGAPOD to have a configuration with half as many compute racks when compared to air cooling. Only four racks with liquid cooling can achieve the same performance as the original eight rack air cooling configuration, thus achieving maximum utilization of the data center space.

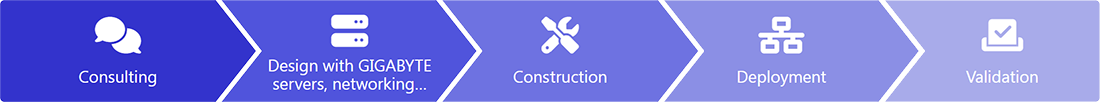

Comprehensive Deployment Process

A single GIGAPOD scalable unit with 32 GPU servers requires over 20,000 components to complete, so a highly systematic process is needed to ensure that each stage runs smoothly. From consultation to actual deployment, GIGABYTE utilizes a five-step process to ensure that GIGAPOD is successfully built and delivered from start to finish.

Figure 4: Deployment Process

The entire process involves countless detailed discussions, such as GIGAPOD’s power supply configuration. For the air-cooled version of GIGAPOD, each rack requires support for 50 kW of power for the IT hardware, including four 12 kW servers, switches, and other components. To address this, GIGABYTE selects the IEC60309 100A 380V power plug and provides a redundant design with two sets of PDUs (Power Distribution Units) per rack. In the liquid cooling solution, since the density inside the rack is doubled, the power requirement increases to 100 kW per rack, which uses a 2+2 PDU configuration. GIGAPOD also supports two types of power outlets, C19/C20 or Anderson, allowing customers to choose the solution that best fits their needs. Additionally, data center power requirements, such as the type of AC power input, may vary based on geographical location and other factors. So, the GIGABYTE team remains highly flexible, evaluating regional environmental conditions to provide the best possible solution.

AI-Driven Software and Hardware Integration

To support the complex needs of AI-driven enterprises, GIGAPOD is paired with the GIGAPOD Management Platform (GPM), offering a unified maintenance interface for devices across the cluster, including servers, networking, storage, power, and cooling. This allows businesses to perform large-scale monitoring, maintenance, and management of all IT hardware in clusters. GPM supports integration with NVIDIA AI Enterprise’s Base Command Manager (BCM) and Canonical’s Juju platform, and also features an automated deployment of task scheduling software like NVIDIA BCM SLURM and Canonical Charmed Kubernetes, giving users flexibility in managing GPU servers with different architectures. Through our collaboration with NVIDIA and Canonical, GIGABYTE combines advanced hardware with powerful software tools to deliver an end-to-end, scalable AI infrastructure management platform.

Another way to enhance the cluster is to use Myelintek’s MLSteam, which is an MLOps platform. Through MLSteam, AI R&D teams can focus more on their areas of expertise without spending additional time and costs involved in building development environments. It also allows for effective management of training data and models, including data annotation, model development, model serving, and model retraining, thereby realizing AI lifecycle management. MLSteam also supports NVIDIA AI Enterprise’s model category and NIM to meet various AI research and development needs. It allows for highly customized AI model development, including advanced features like Retrieval-Augmented Generation (RAG), while GPM and GIGABYTE’s high-performance GPU servers support various accelerators, fulfilling the software and hardware architecture requirements for AI R&D teams.

Another way to enhance the cluster is to use Myelintek’s MLSteam, which is an MLOps platform. Through MLSteam, AI R&D teams can focus more on their areas of expertise without spending additional time and costs involved in building development environments. It also allows for effective management of training data and models, including data annotation, model development, model serving, and model retraining, thereby realizing AI lifecycle management. MLSteam also supports NVIDIA AI Enterprise’s model category and NIM to meet various AI research and development needs. It allows for highly customized AI model development, including advanced features like Retrieval-Augmented Generation (RAG), while GPM and GIGABYTE’s high-performance GPU servers support various accelerators, fulfilling the software and hardware architecture requirements for AI R&D teams.

Conclusion

GIGABYTE’s AI data center supercomputing solution, GIGAPOD, not only excels in reliability, availability, and maintainability but also offers unparalleled flexibility. Whether it’s the choice of GPU, rack size, cooling solutions, or custom planning, GIGABYTE adapts to diverse IT infrastructure, hardware requirements, and data center sizes. With services ranging from L6 to L12, covering everything from power and cooling infrastructure design to hardware deployment, system optimization, and after-sales support, we ensure that our customers receive an end-to-end solution that fully meets their operational requirements and performance goals.

Loading

.jpg/fit-in/1280x9999/filters:no_upscale())

)

)

.png/fit-in/1280x9999/filters:no_upscale())

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

.png/fit-in/1280x9999/filters:no_upscale())

)

)

.png/fit-in/1280x9999/filters:no_upscale())

)

)

)

)

)

)

)

)

)

.jpg/fit-in/1280x9999/filters:no_upscale())

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)

)